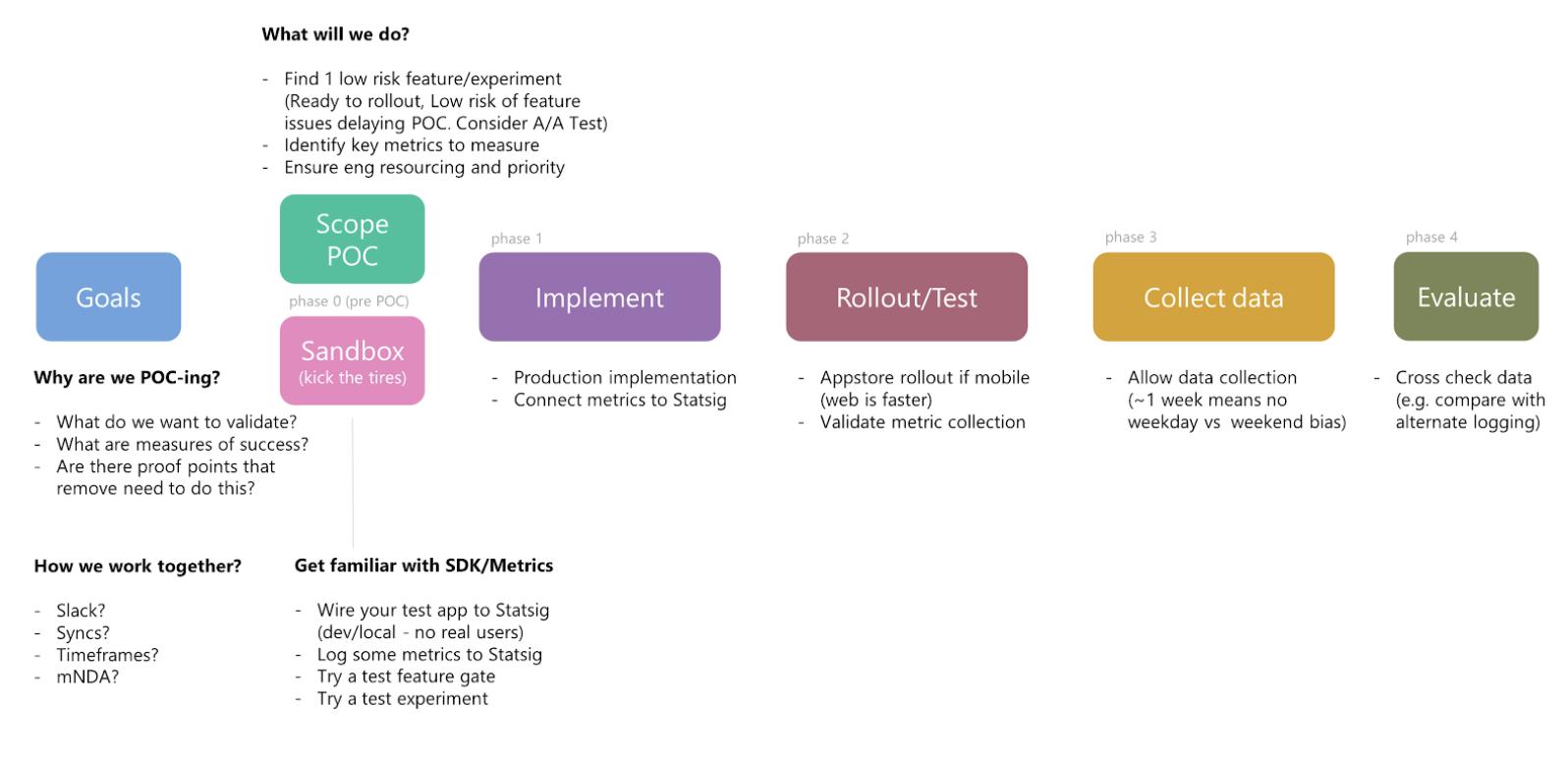

At Statsig, we encourage customers to try us out by running a proof of concept (POC), or limited test, so that we can prove our value. Typically, this evaluation consists of implementing 1-2 low-risk features and/or experiments.

In this guide, we suggest planning steps to help you run an effective proof of concept.


Running a worthwhile and meaningful proof of concept requires a bit of planning:

Plan: Define your overall goals & measures of success

Determine why you are creating a POC and how you’ll measure success. A solution is only as good as the problem it solves, so make sure your measures of success actually reflect that problem.

Examples:

- “We want to start building out an experimentation platform and have a few ideas for early test experiments.”

- “Our internal feature flagging tool doesn’t have scheduled rollouts and we can’t measure metric impact against those rollouts. We’d like to test Statsig’s feature flagging solution on our new web service and see if the additional features are worthwhile.”

- “I want to see if utilizing your stats engine will free up data scientist time to work on more sophisticated analysis.”

Once you’ve established your overarching goals, you’ll want to target 1-2 low risk features or experiments that you can use to validate the platform.

Examples:

- “I want to test two new website layouts against the incumbent by running an A/B/C test and validate that these changes increase user engagement metrics.”

- “We want to roll out a new search algorithm and measure its performance impact.”

- “We’ve run experimentation in the past and want to validate the results of a certain experiment using your stats engine.”

Phase 0: Scope & Prepare your POC

Now that we’ve defined why we’re trying Statsig and we have a few measures to determine a successful implementation, let’s plan out exactly how we’ll achieve our goals.

When choosing what to POC, we recommend this thought process as a starting point:

- What is the path of least resistance that will let you implement Statsig effectively and test the capabilities you need?

- What does your near-term experimentation roadmap call for? What are those upcoming tests, and where will they run? Let’s POC one of those! It’s a good idea to POC something that is representative of your real-world use case.

- Is there a particular part of your app(s) that is commonly used for testing?


Who will be working on this POC?

Statsig is a collaborative tool for data, engineering, and product stakeholders. Ideally each team within your organization is represented during the testing period. If you work for a startup or small organization, these roles might fall under the responsibility of one person.

A typical role breakdown for a Statsig implementation looks something like:

Traditional Team Structure

- Engineer/Data – Role: Implementation and Orchestration
  - SDK implementation on server/web/mobile
  - Data pipelining metrics into Statsig
    - SDK, warehouse ingestion, data tool integrations
  - Feature/Experiment orchestration within the code base
  - Data analysis on the console UI
- Product – Role: Planning and Execution
  - Plan and Implement features/experiments in Statsig console
  - Validate test results


What parts of our tech stack will host the solution and can Statsig support those services?

Statsig is a full-stack solution, so in most cases it can cover your client, server, and mobile applications. Confirm that the systems you plan to use internally are supported by Statsig. We also integrate with many of the tools customers are already using today!


Define the timeline for the POC

To keep yourself and your team accountable, we recommend setting a timeline of 2-4 weeks for your proof of concept. This lets you get up to speed with the software quickly, while ensuring you scope the work so that you can measure the value and impact of Statsig in a timely manner.

Example Timeline:

Weeks 1-2

- Instrument Statsig SDK into your app

- Set up metric ingestion pipelines

- Begin rollouts

Weeks 3-4

- Finalize rollouts

- Collect Data

- Analyze Results

- Finalize POC
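To make the 2-4 week window concrete, here is a minimal Python sketch that turns the example timeline into calendar dates. The phase labels come from the timeline above; the start date and the `phase_windows` helper are hypothetical and only illustrate the date arithmetic.

```python
from datetime import date, timedelta

# Hypothetical phase plan mirroring the example timeline (weeks are 1-indexed, inclusive).
PHASES = [
    ("Instrument SDK, set up metric ingestion, begin rollouts", 1, 2),
    ("Finalize rollouts, collect data, analyze results, finalize POC", 3, 4),
]


def phase_windows(start: date, phases=PHASES):
    """Return (label, first_day, last_day) for each phase, counting whole weeks from `start`."""
    windows = []
    for label, first_week, last_week in phases:
        first_day = start + timedelta(weeks=first_week - 1)
        # The last day of week N is the day before week N + 1 begins.
        last_day = start + timedelta(weeks=last_week) - timedelta(days=1)
        windows.append((label, first_day, last_day))
    return windows


for label, first, last in phase_windows(date(2024, 1, 1)):
    print(f"{first:%Y-%m-%d} to {last:%Y-%m-%d}: {label}")
```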


How will we get help when we need it?

We pride ourselves on providing excellent customer support. At Statsig, there is no formal ticketing process for support; instead, the Slack community gives you direct access to the engineers and data scientists who build the product. This format allows for fast response times and encourages productive discussion and community engagement. We get some of our best feedback there!

Since you’re already here, our documentation makes it easy to find implementation information, so feel free to start exploring! The search function is particularly useful.

Find some suggested starting points for each role below:


Engineer/Data

- Client SDK Docs

- Server SDK Docs

- Metrics Overview


Product

- Bootstrapping Your Experimentation Program

- Metrics Overview

- Product Experimentation Best Practices has a great overview of the planning and evaluation process


Where to start sandboxing to get familiar with Statsig?

Sign up for a free Statsig account. You’ll be able to access the platform immediately and start tinkering with the SDKs.

Here are some recommended evaluation exercises to get properly acquainted with the platform:

- Your First Feature

- Running an A/A Experiment

- Logging Events
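An A/A experiment assigns users to two identical variants, so any statistically significant difference is a false positive; at a significance level of 0.05, roughly 5% of A/A tests should flag by chance. The sketch below simulates that behavior with a generic two-proportion z-test. It runs under assumed parameters (a 10% conversion rate, 1,000 users per group) and is not a description of how Statsig’s stats engine computes results.

```python
import math
import random


def z_test_pvalue(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value of a pooled two-proportion z-test."""
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (conv_a / n_a - conv_b / n_b) / se
    # Two-sided tail of the standard normal: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))


def simulate_aa(runs=500, users_per_group=1000, true_rate=0.10, alpha=0.05, seed=7) -> float:
    """Fraction of simulated A/A tests that look significant although both groups are identical."""
    rng = random.Random(seed)
    false_positives = 0
    for _ in range(runs):
        conv_a = sum(rng.random() < true_rate for _ in range(users_per_group))
        conv_b = sum(rng.random() < true_rate for _ in range(users_per_group))
        if z_test_pvalue(conv_a, users_per_group, conv_b, users_per_group) < alpha:
            false_positives += 1
    return false_positives / runs


print(simulate_aa())  # typically close to alpha (0.05)
```

If a real A/A test in your POC flags far more often than the significance level suggests, that is a signal to check assignment and logging before trusting A/B results.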

Phase 1: Implementation Steps

Now that you have a good idea of the overall structure and people responsible for running a test and you/your team have become familiar with the platform, it’s time to implement Statsig!

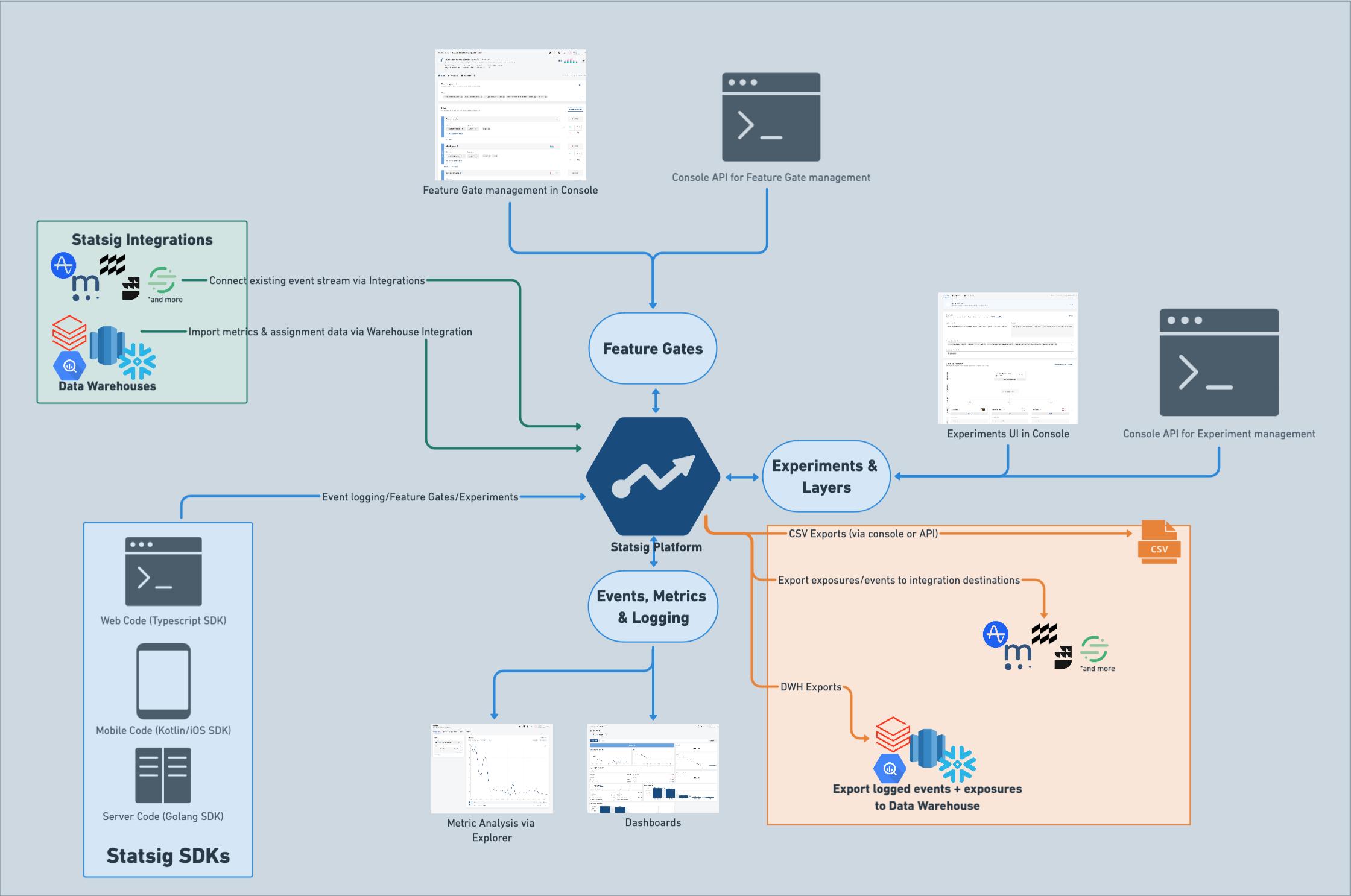

Here’s a general (non-exhaustive) overview of the Statsig platform:

- Metric Ingestion into Statsig

We’ve created a step-by-step guide on metric ingestion here which you can read/pass onto your team to develop a deeper understanding of metrics, but here is the high level overview so you know what to expect:

- Metrics can be ingested into Statsig through several mechanisms

- Server, Client SDKs and the HTTP API

- Integrations

- Data Warehouse Ingestion

- Our new Data Warehouse Native Solution (enterprise contracts only)

- Debug ingestion issues by using the Metrics Dashboard

- You can create custom metrics from your event data for more sophisticated analysis within the platform

- Metrics are joined with your exposure events for experiment analysis.

Once you have successfully imported data into Statsig, you can move on to implementing a feature gate or experiment.
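As a simplified illustration of how metrics are joined with exposure events, the sketch below attributes each metric event to the group a user was first exposed to, counting only events at or after that exposure. The record fields (`user_id`, `group`, `ts`), the sample data, and the attribution rule are assumptions for illustration; Statsig’s actual ingestion schema and attribution logic may differ.

```python
from collections import defaultdict

# Hypothetical exposure and metric-event records (timestamps are arbitrary integers).
exposures = [
    {"user_id": "u1", "group": "control", "ts": 100},
    {"user_id": "u2", "group": "test", "ts": 105},
    {"user_id": "u3", "group": "test", "ts": 110},
]
events = [
    {"user_id": "u1", "event": "purchase", "ts": 90},   # before exposure: not attributed
    {"user_id": "u1", "event": "purchase", "ts": 120},
    {"user_id": "u2", "event": "purchase", "ts": 130},
    {"user_id": "u2", "event": "purchase", "ts": 140},
    {"user_id": "u4", "event": "purchase", "ts": 150},  # never exposed: ignored
]


def join_metrics(exposures, events, metric):
    """Mean count of `metric` events per exposed user in each group, counting events at or after first exposure."""
    first_exposure = {}
    for rec in sorted(exposures, key=lambda rec: rec["ts"]):
        first_exposure.setdefault(rec["user_id"], rec)  # keep the earliest exposure per user

    users = defaultdict(int)
    for exp in first_exposure.values():
        users[exp["group"]] += 1

    totals = defaultdict(int)
    for ev in events:
        exp = first_exposure.get(ev["user_id"])
        if exp and ev["event"] == metric and ev["ts"] >= exp["ts"]:
            totals[exp["group"]] += 1

    return {group: totals[group] / count for group, count in users.items()}


print(join_metrics(exposures, events, "purchase"))
```

Note that exposed users with zero events (like `u3`) still count in the denominator; dropping them would bias the per-user mean upward.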


Set up a Feature Gate / Experiment in the Console

Now we want to roll our sleeves up and get into the platform. We’ll cover the high level here.

For each feature/experiment, you’ll want to answer a few questions:

- Who are the users you’re going to roll this feature/experiment out to?

- What conditions will we use to qualify users?

- ex: release a new feature to LATAM Android users only


- What are the metrics you’re going to use to validate and/or test your features/experiments?

- What are you hoping to affect with this feature/experiment?

- ex: user engagement, performance metrics, etc.


- If experimenting, what is the hypothesis you’ll validate with the metrics?

- ex: “If I release a new user flow, I expect to see user engagement metrics increase 5-10%”

- Where are you going to be deploying the code?

- Ensure you have a clear idea of where the code will be deployed in order to prevent any delays in implementation
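Before configuring targeting in the console, it can help to write each qualifying condition down precisely enough that engineering and product agree on it. Below is a minimal sketch of the LATAM Android example as a predicate, assuming a hypothetical `User` record and a hypothetical country list. In Statsig itself, these conditions are configured as rules in the console rather than written in your code.

```python
from dataclasses import dataclass

# Hypothetical definition of "LATAM"; agree on your own list before configuring the rule.
LATAM_COUNTRIES = {"AR", "BR", "CL", "CO", "MX", "PE"}


@dataclass
class User:
    user_id: str
    country: str  # ISO 3166-1 alpha-2 code
    os_name: str


def qualifies(user: User) -> bool:
    """Targeting condition from the example above: LATAM Android users only."""
    return user.country in LATAM_COUNTRIES and user.os_name.lower() == "android"


print(qualifies(User("u1", "BR", "Android")))
print(qualifies(User("u2", "US", "Android")))
```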

Now you’ll want to go into the Statsig Console and implement your features and experiments! As a reminder, we have a few guides that walk through the UI:

- Build your first feature

- Your first A/B test


Implement Feature Gate / Experiment in your app

In addition to the console setup, your team must add the code that actually checks each feature gate or experiment within your app. Statsig SDKs enable you to record feature/experiment exposures.

- Ensure your engineering/implementation team has a clear idea of how to implement Statsig’s SDKs into your software

- Ensure you are sharing the name and strategy for each feature/experiment so your team is on the same page

- Here are some code implementation resources

- Implementing Feature Gates

- Implement an Experiment
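In a real integration, the Statsig SDK evaluates gates and experiments for you; see the implementation resources listed above for the exact calls and signatures. To show the general idea behind a stable percentage rollout, the sketch below uses deterministic, salted hash bucketing: the same user always lands in the same bucket, and raising the percentage only adds users. The `check_gate` function, the gate name `new_checkout_flow`, and the bucketing scheme are hypothetical stand-ins, not Statsig’s API or assignment algorithm.

```python
import hashlib

BUCKETS = 10_000  # 0.01% rollout granularity


def bucket(user_id: str, salt: str) -> int:
    """Map a user deterministically to one of BUCKETS slots using a salted SHA-256 hash."""
    digest = hashlib.sha256(f"{salt}.{user_id}".encode()).digest()
    return int.from_bytes(digest[:8], "big") % BUCKETS


def check_gate(user_id: str, gate_name: str, rollout_pct: float) -> bool:
    """Hypothetical gate check: pass if the user's bucket is below the rollout percentage (0-100)."""
    return bucket(user_id, gate_name) < rollout_pct * BUCKETS / 100


def render_checkout(user_id: str) -> str:
    # The old path stays the default, so turning the gate off acts as a rollback.
    if check_gate(user_id, "new_checkout_flow", rollout_pct=20):
        return "new checkout"
    return "old checkout"


print(render_checkout("user-1"))
```

Salting with the gate name keeps assignments independent across gates, so users who pass one 20% rollout are not systematically the same users who pass another.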

Once the code has been implemented, have your team verify that Statsig is running as expected. Next, we’ll move on to Phase 2, where we will roll out Statsig into production systems.

Phase 2: Rollout & Test

At this point, your team has done all the work necessary to get Statsig into your systems and now needs to deploy these changes as a release into your larger systems. Typically, customers roll out Statsig changes like any other internal code release, using an internal CI/CD pipeline or equivalent to deploy the SDKs.

After deployment, you should expect to see some exposure data available in the diagnostics tabs for both features and experiments. If there are any issues, we’re here to help in the Slack community!

Here are some guiding pieces of documentation to help:

- Best Practices: Deployment of Feature Gates

- Review the Product Experimentation Best Practices, particularly step 2 (Monitoring Phase)
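Statsig runs its own health checks on experiments. As an independent sanity check on exported exposure counts, a sample ratio mismatch (SRM) test compares the observed split with the split you configured. Below is a minimal sketch for two groups using a chi-square goodness-of-fit test with one degree of freedom; the 0.001 threshold is a common convention assumed here, not a Statsig setting, and the counts in the usage line are hypothetical.

```python
import math


def srm_check(control_n: int, test_n: int, expected_test_share: float = 0.5, alpha: float = 0.001):
    """Return (p_value, mismatch_flag) for a two-group sample ratio mismatch test."""
    total = control_n + test_n
    expected_test = total * expected_test_share
    expected_control = total - expected_test
    chi2 = ((test_n - expected_test) ** 2 / expected_test
            + (control_n - expected_control) ** 2 / expected_control)
    # With 1 degree of freedom, chi2 = z**2, so P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return p_value, p_value < alpha


print(srm_check(5000, 5500))
```

A flagged mismatch usually points to an assignment or logging problem (for example, exposures only logged on one code path), which should be fixed before reading results.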

Phase 3: Collect Data & Read Results

With everything instrumented and rolled out according to the plan above, we must now wait for data to populate into the system. Typically we’ll advise customers to allow 2 weeks minimum for data to be collected and for metrics to move towards statistical significance. You can monitor this process using our log event stream within the metrics dashboard and you’ll also want to ensure your health checks are passing.

- You can monitor your experiments as they roll out

- Reading Experiment Results
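How long “2 weeks minimum” really needs to be depends on your traffic and on the size of the effect you hope to detect. A rough estimate comes from the standard sample-size formula for a two-sided, two-proportion z-test, sketched below. The baseline rate, the 5% relative lift (echoing the example hypothesis earlier), and the daily traffic are hypothetical, and Statsig’s engine may apply variance reduction that changes the real requirement, so treat the output as a planning estimate only.

```python
import math
from statistics import NormalDist


def users_per_group(baseline_rate: float, relative_lift: float, alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate per-group sample size for a two-sided two-proportion z-test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_needed(baseline_rate: float, relative_lift: float, daily_users_per_group: int, **kwargs) -> int:
    """Days of steady enrollment needed to reach that per-group sample size."""
    n = users_per_group(baseline_rate, relative_lift, **kwargs)
    return math.ceil(n / daily_users_per_group)


# Hypothetical: 10% baseline conversion, detect a 5% relative lift, 5,000 users per group per day.
print(users_per_group(0.10, 0.05), days_needed(0.10, 0.05, daily_users_per_group=5000))
```

Because the required sample grows with the inverse square of the effect size, halving the lift you want to detect roughly quadruples the time needed; even when the estimate is short, running for full weeks helps average out day-of-week effects.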

Ideally, your results will come back with a clear winner but that’s not always the case. The nature of experimentation and feature analysis leads to interesting insights that can help your product team make better decisions in the future.

Phase 4: Validate Statsig’s Engine

We don’t want you to just take our word for the results; we encourage our users to validate their findings. That’s why we make data exports easy, so you can compare our stats engine with any internal or existing tools you might be using.

- Validating Data
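A simple baseline for validating results against exported data is to recompute the difference in means yourself and compare it with what the console reports. The sketch below uses a normal-approximation (Welch-style) standard error on per-user metric values; the input lists are hypothetical. Depending on configuration, Statsig’s engine may apply adjustments such as variance reduction or outlier capping, so expect close but not necessarily identical numbers.

```python
import math
from statistics import NormalDist, fmean, variance


def compare_means(control, test, alpha=0.05):
    """Difference in means (test - control) with a normal-approximation CI and two-sided p-value."""
    mean_c, mean_t = fmean(control), fmean(test)
    # Unpooled standard error, so the two groups may have different variances.
    se = math.sqrt(variance(control) / len(control) + variance(test) / len(test))
    if se == 0:
        raise ValueError("both groups have zero variance; the test is undefined")
    diff = mean_t - mean_c
    z = diff / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    half_width = NormalDist().inv_cdf(1 - alpha / 2) * se
    return {
        "diff": diff,
        "relative_lift": diff / mean_c,
        "ci": (diff - half_width, diff + half_width),
        "p_value": p_value,
    }


# Hypothetical exported per-user metric values for each group.
print(compare_means([1, 2, 3, 4, 5] * 20, [2, 3, 4, 5, 6] * 20))
```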

Once your team has done their due diligence in evaluating the Statsig platform, from the ease of use to the reliability of the analysis and so on, you’ll be able to decide if the tool is the right fit for your needs.

Next Steps

Upon completing an evaluation, if you found the platform to be a useful tool for up-leveling your experimentation/feature gating processes, the next step is to prepare your POC for a full rollout. Follow this guide to see the steps required to roll out Statsig to a broader production deployment.

- From POC to Production

Source: https://t-tees.com
